Overlays focus on the cross-host communication challenge. Containers on the same host that are connected to two different overlay networks cannot communicate with each other via the local bridge; they are segmented from one another. Multi-host networking requires additional parameters when launching the Docker daemon, as well as a key-value store. Some overlays rely on a distributed key-value store; if you're doing container orchestration, you'll already have one lying around. Support for public clouds is particularly important for overlay drivers because, among other things, overlays best address hybrid cloud use cases and provide scaling and redundancy without having to open public ports. Each of the cloud provider tunnel types creates routes in the provider's routing tables, just for your account or virtual private cloud (VPC).

Underlay network drivers expose host interfaces (e.g., the physical network interface at eth0) directly to containers or VMs running on the host. Two such underlay drivers are media access control virtual local area network (MACvlan) and internet protocol VLAN (IPvlan). The operation and behavior of the MACvlan and IPvlan drivers are very familiar to network engineers.

With the introduction of multi-host networking, Docker chose to leverage HashiCorp's Serf as the gossip protocol, selected for its efficiency in neighbor table exchange and convergence times. For those needing support for other tunneling technologies, Flannel may be the way to go. It supports udp, vxlan, host-gw, aws-vpc or gce.
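To make the backend choice concrete, here is a minimal Python sketch that builds a Flannel-style network configuration as JSON. The `Network` and `Backend.Type` keys follow Flannel's configuration format as I understand it; the helper name `flannel_net_config` and the `10.1.0.0/16` range are purely illustrative assumptions, so check the Flannel documentation before relying on this.

```python
import ipaddress
import json

# Backend types listed in this post; Flannel may support others.
FLANNEL_BACKENDS = {"udp", "vxlan", "host-gw", "aws-vpc", "gce"}


def flannel_net_config(network: str, backend: str = "vxlan") -> str:
    """Return a JSON document describing a Flannel-style network (hypothetical helper)."""
    if backend not in FLANNEL_BACKENDS:
        raise ValueError(f"unknown backend type: {backend!r}")
    # Raises ValueError if the CIDR string is malformed.
    ipaddress.ip_network(network)
    config = {"Network": network, "Backend": {"Type": backend}}
    return json.dumps(config, indent=2)


if __name__ == "__main__":
    # Example address range only; pick one that does not clash with your hosts.
    print(flannel_net_config("10.1.0.0/16", "host-gw"))
```

The resulting JSON is the kind of document Flannel reads from its key-value store, which is one reason the distributed store matters for overlays.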

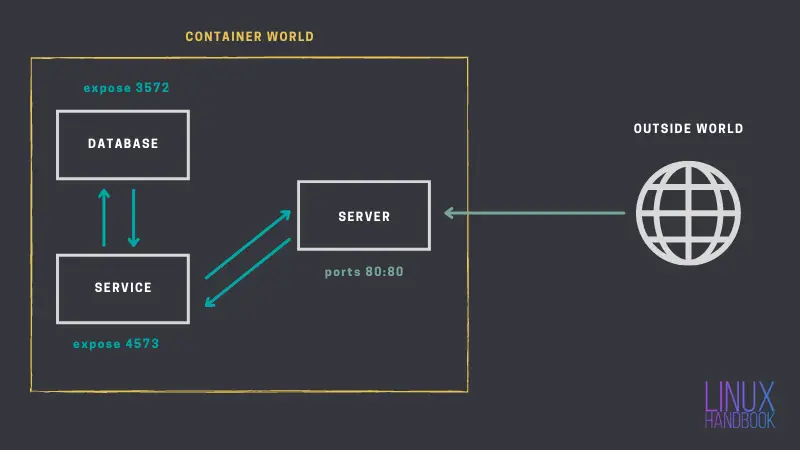

While bridged networks solve port-conflict problems and provide network isolation to containers running on one host, there's a performance cost related to using NAT, which is used to provide communication beyond the host.

Host networking, sometimes referred to as native networking, is conceptually simple, making it easier to understand, troubleshoot and use. In this approach, a newly created container shares its network namespace with the host, providing higher performance (near metal speed) and eliminating the need for NAT; however, it does suffer from port conflicts. While the container has access to all of the host's network interfaces, unless deployed in privileged mode, the container may not reconfigure the host's network stack. Host networking is the default type used within Mesos. In other words, if the framework does not specify a network type, the container will not be given a new network namespace and will instead share the host network.

Overlays use networking tunnels to deliver communication across hosts. This allows containers to behave as if they are on the same machine by tunneling network subnets from one host to the next; in essence, spanning one network across multiple hosts. Many tunneling technologies exist, such as virtual extensible local area network (VXLAN). VXLAN has been the tunneling technology of choice for Docker libnetwork, whose multi-host networking entered as a native capability in the 1.9 release.
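To give a feel for what VXLAN tunneling means on the wire, here is a small Python sketch of the 8-byte VXLAN header defined in RFC 7348. It only illustrates the encapsulation idea; it is not how Docker or libnetwork implement it, and the function names are hypothetical. A real tunnel endpoint would also wrap the result in outer UDP, IP and Ethernet headers.

```python
import struct

VXLAN_FLAG_VNI_VALID = 0x08  # the "I" flag: the VNI field is valid
VXLAN_UDP_PORT = 4789        # IANA-assigned destination port for VXLAN


def vxlan_header(vni: int) -> bytes:
    """Build the 8-byte header: flags (8 bits), reserved (24), VNI (24), reserved (8)."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)


def encapsulate(vni: int, inner_frame: bytes) -> bytes:
    """Prefix an inner Ethernet frame with a VXLAN header (the UDP payload)."""
    return vxlan_header(vni) + inner_frame


def decapsulate(payload: bytes) -> tuple[int, bytes]:
    """Split a VXLAN UDP payload back into (VNI, inner frame)."""
    if len(payload) < 8:
        raise ValueError("payload shorter than a VXLAN header")
    flags_word, vni_word = struct.unpack("!II", payload[:8])
    if not (flags_word >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI-valid flag not set")
    return vni_word >> 8, payload[8:]


if __name__ == "__main__":
    packet = encapsulate(42, b"inner ethernet frame")
    print(packet[:8].hex(), decapsulate(packet))
```

The 24-bit VNI is what keeps overlay networks separate: frames carrying different VNIs belong to different virtual networks even when they travel between the same pair of hosts.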

It is essential for us to understand how container networking works. This is important not only from the perspective of service communication; it also forms an important aspect of infrastructure security. In this post we will learn briefly about the various networking modes available for Docker containers and deep dive into Host Mode networking.

None is straightforward in that the container receives a network stack but lacks an external network interface. It does, however, receive a loopback interface. Both the rkt and Docker container projects provide similar behavior when None or Null networking is used.
